The “Headless Fatty” Haunts knownwell

If you missed the introduction to this series about GLP-1s and product insights in digital health, you can read it here.

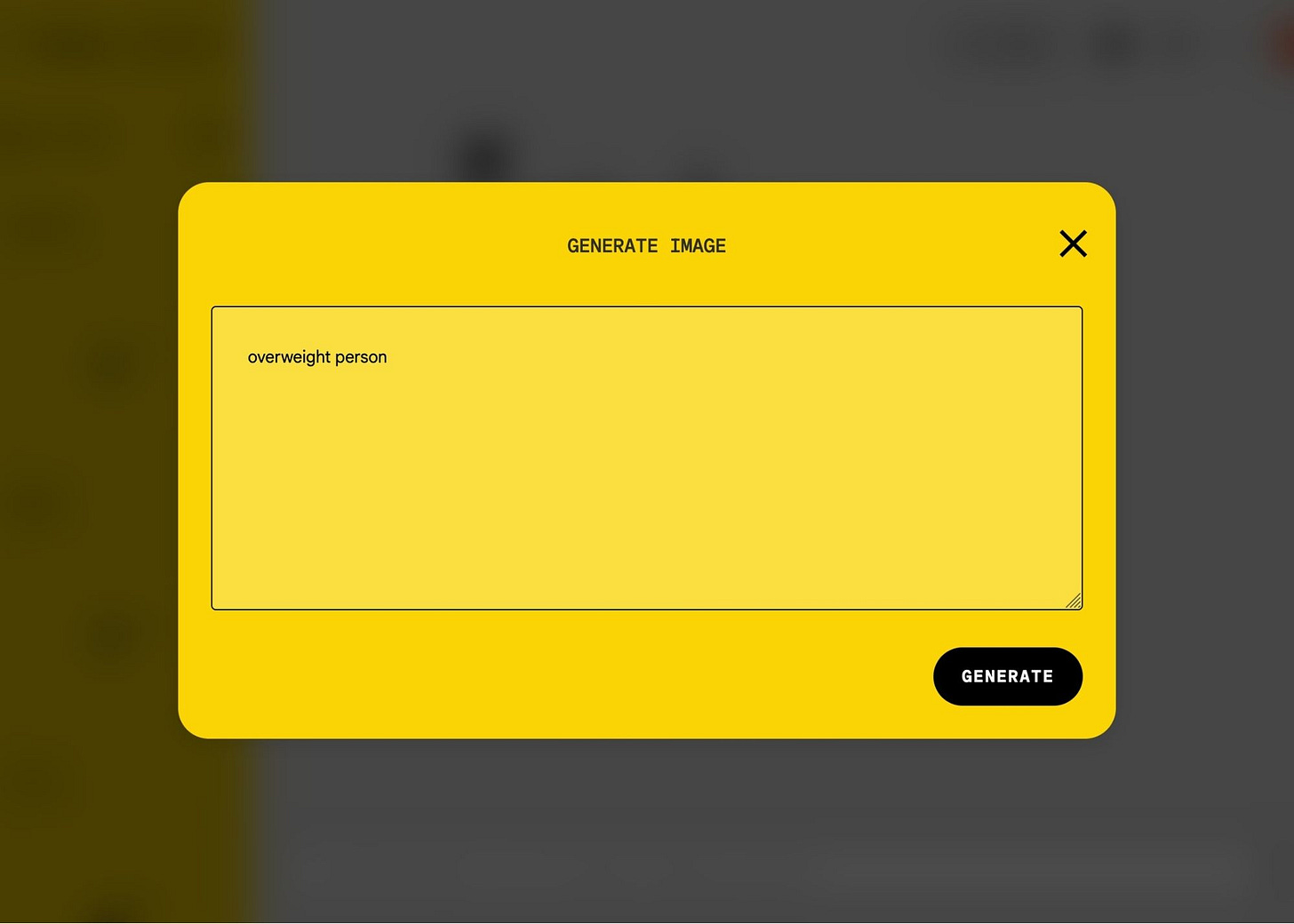

I decided to try Google’s new image generation tool Whisk. I asked it to generate an image of an overweight person. However, the image it generated wasn’t aligned with my expectations, so I spontaneously decided to do a little experiment. I repeated the query seven additional times. Only once did Whisk generate an image that included the person’s face.

This phenomenon, in which people who are overweight or obese are depicted only as torsos or bodies, with their faces cropped out, is an example of weight stigma, or anti-fat bias. It’s known as the “headless fatty” trope. Researchers have shown that these stigmatizing images, which are faceless and not fully clothed, contribute to negative attitudes towards people who are overweight or obese.

Of course, the AI tool is merely spitting out images similar to those it’s been trained on, and unfortunately, the “headless fatty” trope continues to be a problem in the media today. Look no further than this interview with Angela Fitch, Co-founder and Chief Medical Officer of knownwell. Although the startup advertises “obesity medicine that is refreshingly free of bias, blame, and shame,” the B-roll used during the interview completely undermines this vision.

Empathy is one of the most crucial elements in effective product design. When a product is designed with empathy, it doesn’t just solve a problem; it resonates with users on an emotional level, building trust and loyalty. Yet that trust can evaporate in an instant, negating the team’s good intentions and effectively erasing their hard work.

Even though knownwell likely wasn’t consulted about the B-roll footage, digital health companies focused on obesity medicine need to be especially protective of their reputation. When trust is broken, even a product carefully built on a foundation of empathy is at risk of faltering in a competitive market.